Face2Multimodal


This website presents the Face2Multimodal demo, powered by the BROOK database.

(Accepted by the CHI 2020 Workshop on Speculative Designs for Emergent Personal Data Trails: Signs, Signals and Signifiers. Link: https://www.emergentdatatrails.com/)

arXiv: https://arxiv.org/abs/2005.08637

Source code: https://github.com/unnc-ucc/Face2Multimodal

Introduction

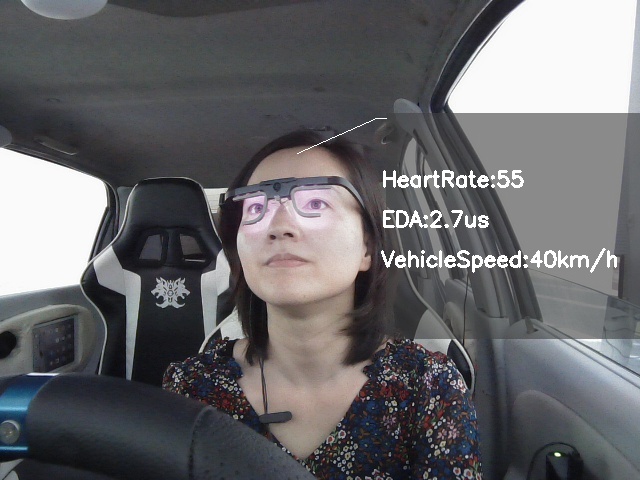

We present a real-time, in-vehicle design that estimates a driver’s multimodal states (skin conductance and heart rate) and driving status (vehicle speed) from their facial expressions. For each data stream, we use a DenseNet as the model architecture.
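Because each data stream gets its own DenseNet, one face crop is passed to several independent models. The following is a minimal plain-Python sketch of that per-stream dispatch. It assumes each trained model has already been wrapped as a callable mapping a preprocessed face crop to a class index; the names `STREAMS`, `Predictor`, and `predict_streams` are hypothetical and not part of the repository.

```python
from typing import Any, Callable, Dict

# Hypothetical stream names: one independently trained DenseNet per stream.
STREAMS = ("heart_rate", "skin_conductance", "speed")

# Assumption: each model is wrapped as a callable (face crop -> class index).
Predictor = Callable[[Any], int]


def predict_streams(face: Any, models: Dict[str, Predictor]) -> Dict[str, int]:
    """Run every per-stream model on the same face crop.

    Since each stream has its own model, the models can be loaded,
    retrained, or swapped independently of one another.
    """
    missing = [s for s in STREAMS if s not in models]
    if missing:
        raise KeyError(f"no model registered for: {missing}")
    # Same input, one prediction per stream.
    return {stream: models[stream](face) for stream in STREAMS}
```

With stub predictors such as `{"heart_rate": lambda f: 12, "skin_conductance": lambda f: 35, "speed": lambda f: 48}`, `predict_streams(None, stubs)` returns the class index from each model keyed by stream name.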

Showcase Examples

Here we showcase one example use case: the Face-to-Multimodal Predictor.

How to Set Up

- Use a face detector (e.g., dlib) to crop the driver’s frontal face and resize the crop to 224x224 px.

- Load a model from the “models” folder with PyTorch (versions 1.0.0 to 1.4.0) and feed the cropped face to it as input.

- Run the model to obtain the predicted class.

- Because each prediction is a class index rather than a physical value, convert it as follows:

  - Heart rate (beats per minute): result = predicted class + 60

  - Skin conductance (µS): result = predicted class / 10.0

  - Vehicle speed (km/h): result = predicted class
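The conversion rules above can be captured in a small helper. This is a sketch, assuming the network ends in a classification layer whose highest-scoring output is taken as the predicted class (argmax); the function names and stream keys are hypothetical, while the offset (+60) and scale (/10.0) come directly from the rules listed above.

```python
from typing import Sequence


def predicted_class(scores: Sequence[float]) -> int:
    """Return the index of the highest class score (argmax; first index wins ties)."""
    if not scores:
        raise ValueError("scores must be non-empty")
    return max(range(len(scores)), key=lambda i: scores[i])


def class_to_value(stream: str, cls: int) -> float:
    """Convert a predicted class index into a physical measurement."""
    if stream == "heart_rate":        # beats per minute: offset by 60
        return float(cls + 60)
    if stream == "skin_conductance":  # microsiemens: scale down by 10
        return cls / 10.0
    if stream == "speed":             # km/h: class index is the value
        return float(cls)
    raise ValueError(f"unknown stream: {stream!r}")
```

For example, `class_to_value("heart_rate", predicted_class([0.1, 2.3, 0.7]))` gives `61.0`, and `class_to_value("skin_conductance", 35)` gives `3.5`. With a PyTorch output tensor for a batch of one, `output.argmax(dim=1).item()` plays the role of `predicted_class`.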

Models for Demonstration

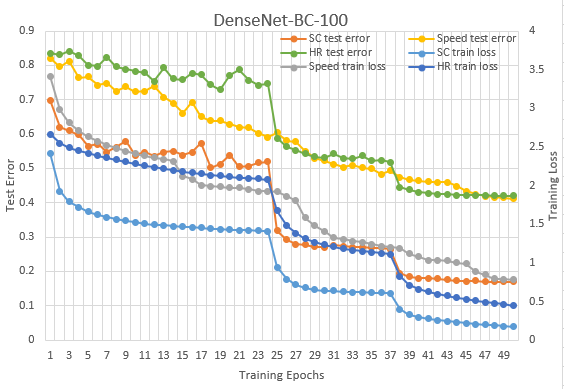

All models are saved in the “models” folder. The sample models use the DenseNet-BC-100 architecture. The training data contain heart rate (beats per minute), skin conductance (µS), and speed (km/h). Training is implemented in PyTorch. Further training is a work in progress; we expect that more extensive hyperparameter tuning will improve accuracy.
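For reference, "BC-100" names the DenseNet variant with bottleneck layers (B) and transition-layer compression (C) at a total depth of 100. The sketch below derives the layer layout from that depth, assuming the CIFAR-style configuration from the original DenseNet paper: three dense blocks, growth rate k = 12, compression factor 0.5, and an initial convolution with 2k output channels. Whether the sample models here use exactly these hyperparameters is an assumption; the repository's training code is authoritative. The function name `densenet_bc_layout` is hypothetical.

```python
from typing import List, Tuple


def densenet_bc_layout(depth: int = 100, growth_rate: int = 12,
                       compression: float = 0.5,
                       num_blocks: int = 3) -> Tuple[int, List[int]]:
    """Derive layers per dense block and channel widths after each stage."""
    # Non-dense layers: initial conv + (num_blocks - 1) transition convs + classifier.
    overhead = num_blocks + 1
    # Each bottleneck layer contributes two convolutions (1x1 then 3x3).
    if (depth - overhead) % (2 * num_blocks) != 0:
        raise ValueError(f"depth {depth} does not fit {num_blocks} BC blocks")
    layers_per_block = (depth - overhead) // (2 * num_blocks)

    channels = 2 * growth_rate  # output width of the initial convolution
    stage_channels = []
    for block in range(num_blocks):
        # Every layer in a dense block appends growth_rate new feature maps.
        channels += layers_per_block * growth_rate
        stage_channels.append(channels)
        if block < num_blocks - 1:
            # Transition layer compresses the channel count (floored).
            channels = int(channels * compression)
            stage_channels.append(channels)
    return layers_per_block, stage_channels
```

Under these assumptions, `densenet_bc_layout()` returns `(16, [216, 108, 300, 150, 342])`: 16 bottleneck layers per block, with 342 feature maps reaching the classifier.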

Accuracy